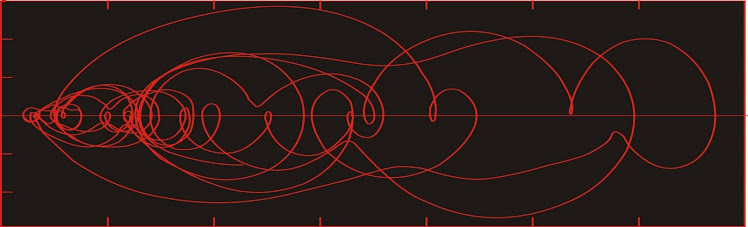

Dust flux, Vostok ice core

Two-dimensional phase space reconstruction of dust flux from the Vostok core over the period 186-4 ka using the time derivative method. Dust flux is on the x-axis; its rate of change is on the y-axis. From Gipp (2001).

Saturday, July 30, 2011

Deconstructing algos, part 4: Phase space reconstructions of CNTY busted trades suggests high speed gang-bangs in the market

Thursday, July 28, 2011

Similarities between paleoclimate transfer function determination and statistical arbitrage

Paleoclimate transfer functions

Back in the day I was given the task of converting a set of programs from FORTRAN 66 to something that could be run on a PC. These programs were designed to use a wide variety of paleoclimatic indicator sets--in this case the relative abundances of 30 species of foraminifera, and known distributions of summer and winter temperature and salinities at the ocean surface over thousands of surface samples, and convert them into a transfer function which related the desired environmental parameters to the foraminiferal abundance data.

This really brings back memories.

The basic idea is this: Summertime surface temperature (Ts) will be a function of the foraminiferal species abundance. If the abundances measured at a particular point were represented by the probability series p1, p2, p3, . . . , p30 (where Σp = 1), then an expression might be found as follows:

Ts = a1p1 + a2p2 + a3p3 + . . . + a30p30 + a31p1p1 + a32p1p2 + . . . for a whole lot of terms. Assuming we used all first and second-order terms, we would have to develop 496 parameters in the above equation. That is rather a lot, particularly for the computers we were using when FORTRAN 66 was in vogue (well, okay, it was obsolete then--we were really using FORTRAN 77).
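The count of 496 can be checked with a little arithmetic, assuming the total includes one constant term in addition to the first-order terms and the second-order terms (squares and cross-products, each pair counted once):

```latex
N(n) = \underbrace{n}_{\text{first-order}}
     + \underbrace{\frac{n(n+1)}{2}}_{\text{second-order}}
     + \underbrace{1}_{\text{constant}},
\qquad
N(30) = 30 + 465 + 1 = 496 .
```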

So instead of using all of the foraminiferal species abundance data, we would use factor analysis to simplify the data. Factor analysis is a bit of statistical wizardry which groups species which behave in a similar fashion together into a single factor. We would use the minimum number of factors to represent an acceptable amount of variance--in the case of transfer functions for the North Atlantic we reduced our thirty species to six factors. Our expression for temperature then becomes:

Ts = a1f1 + a2f2 + a3f3 + . . . + a6f6 + a7f1f1 + a8f1f2 + . . . + a27f6f6 + a28. Values for a1 to a28 were found by multiple regression. The PCs of the late 1980s were capable of running such programs in a reasonable length of time.
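The reduction from thirty species to six factors can be sketched roughly as follows. This is only an illustration: it uses principal component analysis (via a singular value decomposition) as a stand-in for the factor analysis in the original programs, the function name `reduce_to_factors` is made up for the example, and the abundance table is synthetic rather than real foraminiferal counts.

```python
import numpy as np

def reduce_to_factors(abundances, n_factors):
    """Project species abundances onto the leading principal components.

    abundances: (n_samples, n_species) array, each row summing to 1.
    Returns (scores, loadings, explained), where explained is the fraction
    of the total variance captured by the retained components.
    """
    centered = abundances - abundances.mean(axis=0)
    # Rows of vt are orthonormal directions in species space.
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    loadings = vt[:n_factors].T          # (n_species, n_factors)
    scores = centered @ loadings         # (n_samples, n_factors)
    explained = (s[:n_factors] ** 2).sum() / (s ** 2).sum()
    return scores, loadings, explained

# Synthetic stand-in for the surface-sample counts (not real data).
rng = np.random.default_rng(0)
abundances = rng.dirichlet(np.ones(30), size=500)
scores, loadings, explained = reduce_to_factors(abundances, n_factors=6)
```

In practice one would raise `n_factors` until `explained` reaches whatever is judged an acceptable amount of variance.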

Once all of the surface samples were run for the present day, you could look at the foraminifera found at different levels of a dated core. Let's say you have a sample from a level in a core known to be 100,000 years old. You count the numbers of the different species of foraminifera, convert your observations into the factors determined above, apply the factor loading scores to the transfer function, and calculate the sea surface temperature at the site of your core 100,000 years ago.
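A minimal sketch of the calibrate-then-apply procedure, assuming ordinary least squares for the multiple regression. The helper names (`design_matrix`, `fit_transfer_function`, `predict`) and all of the numbers are invented for illustration; the columns are ordered to match a1 through a28 in the six-factor expression.

```python
import numpy as np
from itertools import combinations_with_replacement

def design_matrix(factors):
    """Columns: each factor, each pairwise product (squares included), a constant."""
    n_samples, n_factors = factors.shape
    linear = [factors[:, i] for i in range(n_factors)]
    pairs = combinations_with_replacement(range(n_factors), 2)
    quadratic = [factors[:, i] * factors[:, j] for i, j in pairs]
    constant = [np.ones(n_samples)]
    return np.column_stack(linear + quadratic + constant)

def fit_transfer_function(factors, temperature):
    """Least-squares estimate of the coefficients (a1..a28 for six factors)."""
    coeffs, *_ = np.linalg.lstsq(design_matrix(factors), temperature, rcond=None)
    return coeffs

def predict(factors, coeffs):
    """Evaluate the fitted transfer function on new factor scores."""
    return design_matrix(factors) @ coeffs

# Synthetic calibration set (not real data): 1000 "surface samples".
rng = np.random.default_rng(1)
f_modern = rng.normal(size=(1000, 6))
true_coeffs = rng.normal(size=28)
ts_modern = design_matrix(f_modern) @ true_coeffs + rng.normal(scale=0.1, size=1000)

coeffs = fit_transfer_function(f_modern, ts_modern)

# Down-core use: factor scores for one dated sample give a temperature estimate.
f_core = rng.normal(size=(1, 6))
ts_estimate = predict(f_core, coeffs)[0]
```

The same `design_matrix` applied to thirty raw abundances would have 496 columns, which is the motivation for reducing to factors first.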

As you might expect, there are a lot of things that can go wrong. The environmental preferences of one or more species might change on geological timescales. Different species might bloom during different times of the year, and this may also change due to evolutionary pressures or some confounding effect like iron seeding in coastal seas due to variability in surface runoff. Nevertheless, the technique has been a mainstay of paleoclimatologists for about forty years.

Approaches to statistical arbitrage

The idea of statistical arbitrage is that there is a particular stock (or commodity or bond or what-have-you) which is mispriced relative to a model based on observations. This model would have the form of a transfer function as described above, but instead of using species abundance data, we use observed values of related financial data, including such things as the price of one or more indices, perhaps the unemployment rate, the inflation rate, the price of gold (or other commodities), and so on. As with the transfer functions described above, having accurate financial data is critical (as opposed to manipulated "official" data sets).

There are at least two principal approaches to statistical arbitrage--1) modeling individual stocks (or commodities or bonds or whatever), and 2) matched long-short trades between any number of stocks.

Instead of creating a transfer function between your observations and a particular stock price, you might find the ratio between the prices of two stocks. If the modeled ratio differs from the observed ratio, there may be an arbitrageable opportunity in going long the underpriced stock and short the overpriced one. Your assumption is that the dynamics of the ratio between the two prices have not changed, so you plan to take advantage of the reversion to the mean. You don't know whether mean reversion will occur by the overpriced stock falling or the underpriced one rising, but the paired trade should work provided the relationship between the stocks does return to the mean.
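A bare-bones sketch of such a ratio signal follows. It assumes the "modeled" ratio is nothing more than a trailing mean and that a divergence of two standard deviations is worth acting on; the function name `ratio_signal`, the lookback, the threshold, and the prices are all made up for the example.

```python
import numpy as np

def ratio_signal(price_a, price_b, lookback=60, entry_z=2.0):
    """Return (z, position) for the latest observation of the ratio A/B.

    position is +1 (long A, short B), -1 (short A, long B), or 0 (no trade).
    The "modeled" ratio is simply the trailing mean, which is an assumption.
    """
    ratio = np.asarray(price_a, dtype=float) / np.asarray(price_b, dtype=float)
    history = ratio[-lookback - 1:-1]     # window excludes the latest point
    z = (ratio[-1] - history.mean()) / history.std(ddof=1)
    if z > entry_z:
        return z, -1    # ratio high: A looks overpriced relative to B
    if z < -entry_z:
        return z, +1    # ratio low: A looks underpriced relative to B
    return z, 0

# Synthetic prices (not real data): a stable ratio near 2, then a sudden jump in A.
rng = np.random.default_rng(2)
price_b = np.full(61, 50.0)
price_a = 100.0 + rng.normal(scale=0.5, size=61)
price_a[-1] = 110.0
z, position = ratio_signal(price_a, price_b)
```

The signal says nothing about which leg will move; it only bets that the ratio returns to its recent mean, which is exactly the assumption that can fail.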

Speed comes into play because it is an advantage to be the first market participant to recognize the arbitrage opportunity. As the world is now filled with algorithms seeking these opportunities, they tend not to be available for long. One recent exception was in Canadian banks a few years ago, when two of the major banks were thought to be in trouble (and their dividend yields rose quite sharply as share prices fell)--there was a great trade in going long the high-yielding stocks and short the low-yielding stocks (on the assumption that in Canada there will not be a bank failure).

Many institutions calculate highly involved stat-arb positions involving matched long and short positions over large numbers of stocks. It can be difficult for a human to see how all the matches work. For human traders, stat arb probably works best in paired stocks, or a stock vs. an index.

For the record, I don't have any problem with algos pursuing stat arb. It seems to me to be an inherently fair process, and potentially it does improve liquidity if the under/overvaluation is driven by some sort of investor frenzy. I would have a problem if part of the strategy of the algo is to interfere with the access to information of market competitors by creating latency.

Tuesday, July 26, 2011

Feces-throwing primates rule the world!

As humans have a primate origin, sometimes we can gain valuable insight into human behaviour by studying primates. For instance, primates are known for throwing shit at things when they are angry.

It is true we don't literally do this anymore. Well, not often. But it is still embedded within our psychology. In our language--we might say, "my boss just shit all over me after my presentation," or perhaps, "I'm tired of all your crap", or even, "let's go over there and beat the shit out of them!".

I find it terribly amusing and a little frightening to consider that psychologically we do not much differ from those little monkeys up in the trees throwing shit at each other.

Only we have rockets and nuclear weapons.

In fact we can view the history of warfare as the development of better and better means of throwing shit. The relentless march of technological progress in warfare has been driven by the need to improve three parameters--1) range; 2) accuracy; 3) explosive yield.

Over the years we have progressed from the invention of the catapult to the ultimate dream of primate-kind--brave American technicians sitting in bunkers in Nevada hurling shit at unsuspecting Afghan villagers by remote control. Intercontinental shit-throwing!

We've even managed to hurl some of our shit right out of the solar system!

The war policy of the western powers ever since WWII has been predicated around throwing large volumes of shit from aircraft. Victory through air power!

Viewing some of our modern political problems through the prism of our primate past may provide an interesting perspective.

There is currently considerable consternation over the Iranian regime developing the ability to hurl shit 2,000 km. Why a country that can hurl shit right out of the solar system feels this is a problem is unclear.

There is a lot of noise coming from Israel, which is close enough to be struck by the Iranian shit-flinging devices. But they have tremendous retaliatory capacity--enough to completely bury Iran. Nevertheless they endlessly lobby the US to ensure that American shit will be added to Israeli shit in an attack on Iran.

Meanwhile, the shit-throwing continues in Libya.

"As of March 20, Royal Canadian Armed Forces have been ordered to begin flinging poo at forces loyal to Colonel Qadaffi."

Thursday, July 21, 2011

The World Complex welcomes new readers

This article has attracted a little attention from somebody taking a break from the debt-ceiling debate.

Edit: Perhaps they're just looking for a place to invest their hard-earned cash.

Monday, July 18, 2011

HFT hits the housing market

The short-sale fraud in housing looks a lot like HFT only on a longer timeframe. It makes use of the same phenomenon--the asymmetry in available information.

The way it works is simplicity itself.

The scam artists, usually real estate agents, will secure a legitimate bid on a home, one where the borrower owes far more on the mortgage than the home is worth. Then they arrange for an accomplice investor to make a lower offer on the home.

The agent then presents the lower bid to the lender and asks them to forgive any remaining balance owed -- without disclosing that there was a higher bid made on the home. Once the short sale is approved, the scammer then sells the home to the higher bidder, often on the same day.

At heart this is what latency arbitrage is all about. Delay price quotes and get your counterparty a poor price while you know better ones are available.

For those who say this is all fair--and that anyone is capable of acquiring the software and hardware (or alternatively, that anyone who has gone to all that expense deserves the profit), please note that similar behaviour in the housing market (where it is banks who suffer) is fraud, so therefore . . .

Sunday, July 17, 2011

FIAT: The Legacy (or The Redemption of Ben Bernanke)

One of the most eagerly awaited movies of all time!

FIAT: The Legacy is set in a dark, dystopic time--a time in which central bankers exert perfect control over the economy under the leadership of The Benbernank, which is enforced by FIAT, the weapon of the bankers.

Citizens are subject to draft for the regime's many wars. Our hero, Sam, is captured in one such sweep and almost before he knows it is engaged in a series of senseless conflicts on a digital battlefield.

It turns out that the battles are being fought for the amusement of the ruler of this bleak place--a being called "The Benbernank".

Sam mistakes The Benbernank for Dr. Ben Bernanke, a central figure in the creation of the economic system. The Benbernank sentences our hero to death by combat. He is about to die at the hands of FIAT when he is unexpectedly rescued and escapes to a hidden fortress near the edge of the dystopic world--where the real Dr. Ben Bernanke has been hiding since he was overthrown by The Benbernank.

Dr. Bernanke greets our hero and falls into a regretful reverie of the past. He came to this world and found a blank economic slate, and he vowed to create a perfect system using Keynesian economics. He created The Benbernank as a kind of doppelganger associate to help create that system; and FIAT, who was to fight in service of the people.

The Benbernank, Dr. Bernanke, and FIAT prepare to create the perfect Keynesian system.

Initially, things went well, and prices and values came under control of central authority.

The biggest support for the centralization of the economy was their ability to create not only money, but consumables and capital equipment, including such things as motorcycles and aircraft (shown above) out of nothing.

Then one day--the betrayal.

The Benbernank: "Do you still want me to create the perfect economic system?"

Dr. Bernanke was forced to flee for his life as The Benbernank rebelled and took control of the system. FIAT was corrupted and became a tool of the bankers. The system immediately turned into a repressive system of total price control. Labour was devalued to increase the profits of the bankers. And the people were reduced to consuming one another.

The Benbernank addresses the legions.

"I have freed you from the tyranny of the labourers and producers of society!

Under me, we control prices! We control values! We control FIAT!"

This piece of eye-candy has no meaningful role in the story.

Dr. Bernanke meditates on Hayek.

Dr. Bernanke's assistant, Quota, shows Sam around Dr. Bernanke's compound. A trip to the library shows us that Dr. Bernanke has been reading Austrian Economics over the past decades in his attempt to understand what went wrong.

"So he finally read Rothbard, von Mises, and Hayek."

"Human Action is my favourite!"

Dr. Bernanke's meditations have led him to the solution. They must replace the corrupted system of fiat currency with hard currency based on silver. They plan to get to the comm tower to launch the new silver eagle--and for the final battle against the bankers.

The bankers attack.

But as word spreads of the impending launch of the silver eagles by Dr. Bernanke et al., the people rise up against the bankers. Melees break out throughout the dystopic world, even as the bankers close in on our heroes.

The bankers try everything they can to break the silver longs. They increase the margin requirements and change the rules to discourage taking delivery, but none of it works. So they resort to shooting.

The bankers battle the silver longs.

The bankers are on the verge of victory when FIAT has a flashback to the days when he served the people--and in one last desperate (self-destructive) act of heroism, foils the bankers' attack.

FIAT switches sides.

Our heroes reach the comm tower where they encounter The Benbernank. At last the creation faces his creator. And he has some things to say.

The Benbernank: I did everything you wanted me to! I tried to use Keynesian economics to create the perfect system! I elevated Keynesian economics to its maximum potential! And yet . . . this world is dark and terrible . . . you promised it would work! You promised to help to make the system perfect! But instead, you turned your back on it . . . and me . . .

Dr. Bernanke: I know . . . I did, and I'm sorry. It's just that . . . perfection is impossible to reach through Keynesianism. It can only unfold through free and unfettered human ambition. And you can't know that because I didn't know it when I created you . . . I'm sorry . . . ending the system is the only way forward . . .

The first silver eagle enters circulation . . . and the digital economy implodes!

Our heroes find themselves in the real world.

A world of real prices . . . real values . . . real ethics . . .

Thursday, July 14, 2011

One year later

The first year has been pretty successful. The 10,000th page view came in yesterday.

Up until now I have been scratching out ideas for studying dynamic systems. My objectives for the coming year are to convince you that certain dynamic systems (global climate, for instance) are capable of showing innovation--that is, they can develop new forms of behaviour. I will try to apply some of the previously developed quantitative tools to HFT algos (which also show behavioural change) in the ongoing deconstructing algos series.

I'm heading back to Africa next month so will be back providing occasional updates on the unfolding situation in West Africa. And I may review a book or two.

Monday, July 11, 2011

More evolutionary adventures in HFT

The version of my recent post that appeared on Zero Hedge had this article from Nanex appended to it.

I don't disagree with the gist of the Nanex article and freely admit that they have far more experience in these matters than do I--furthermore, they have more timely access to much better data and have more computation power than I command. I point all this out simply to say that I do not claim to have written that portion of the post--the decision to append it to my article was made by the operators of Zero Hedge. Their website--their decision.

Now about that Nanex article . . .

CQS is on the losing side of history. The ability to upgrade hardware to handle a larger volume of information pales in comparison to the ability to generate more noise by digital means. In fact, given the rates of increase proposed by CQS (33% last week, another 25% in October), I would guess that CQS is increasing its capacity several orders of magnitude more slowly than the rate at which algo traders can increase their data transmission.

From Nanex:

The first chart shows one micro-burst of activity as it occurred on July 5th, 2011, which is the first day after CQS capacity was increased by 33% to 1 million quotes/second. The second chart shows how this same data might look if we imposed the capacity limits that were in effect prior to this trading day.

As you can see, the delay is significant. If this activity level occurred using the limits that existed on July 1st, the delay would have lasted approximately 200ms. We came up with three possible explanations:

1. July 5th just happened to be 33% more active than any trading day in history.
2. The capacity limits from before July 5th were at least 33% too low.
3. An algo is testing how much more quote noise it needs to generate to cause the same effect as before.
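How a fixed capacity limit turns a burst of quotes into delay can be sketched with a simple fluid queue. Only the 1 million quotes/second figure and the 33% increase come from the Nanex text; the burst rate and burst length below are assumed values chosen for illustration, and `backlog_delay_ms` is a made-up helper, not anything Nanex or CQS uses.

```python
def backlog_delay_ms(arrival_rate, capacity, burst_ms):
    """Queueing delay at the end of a burst, in milliseconds.

    Fluid model: quotes arrive at arrival_rate (per second) for burst_ms
    milliseconds and are drained at capacity (per second).
    """
    if arrival_rate <= capacity:
        return 0.0
    backlog = (arrival_rate - capacity) * burst_ms / 1000.0   # quotes waiting
    return backlog / capacity * 1000.0

new_capacity = 1_000_000              # quotes/second, from the Nanex text
old_capacity = new_capacity / 1.33    # implied by a 33% increase
burst_rate = 1_000_000                # assumed: a burst that just fills the new line
burst_ms = 500                        # assumed burst length

delay_new = backlog_delay_ms(burst_rate, new_capacity, burst_ms)
delay_old = backlog_delay_ms(burst_rate, old_capacity, burst_ms)
```

In this model the delay scales with how far the burst exceeds capacity, so whoever generates the quotes can choose the delay by choosing the burst.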

Obviously #3 is the case. The algo traders are merely toying with the authorities. No matter how much they increase the capacity of the lines, the algo traders have the ability to immediately saturate them and create latency on demand.

The only possible fix is placing some sort of limit on the life of an offer.

Friday, July 8, 2011

Deconstructing algos 3: Quote stuffing as a means of restoring arbitrageable latency

In a recent article Nanex has shown that quote stuffing can slow down the updating of series of stock prices, bids and asks. The article was less clear about why one might do that. There could be arbitraging opportunities.

One of the first games these clowns got into was latency arbitrage. HFTer offers a number of shares for sale at one price, and at the first sign of interest, pulls all of the offers and resubmits them at a higher price. The latency comes into play because when another player sends in his orders to fill HFTer's offers, the orders find their way to the market via differing routes, each of which has a different latency (lag time)--so instead of all arriving at once, they arrive singly, giving HFTer time to pull the rest of his offers.

Early this year, Royal Bank of Canada (RBC) launched a new trading program called Thor, which was designed to avoid latency arbitrage. The gist of the program was that the system would monitor the various latencies to all the different exchanges to which orders would be routed, and artificially delay the submission along the fastest route so that all the orders would arrive simultaneously on all exchanges. While perfection did not occur, the early claims were that the various latencies would be measured in microseconds, which at the time seemed reasonable.

Presumably RBC is not the only player that has developed such software.

Now we hear that orders are being stuffed down different channels at such speeds as to change the latencies. In the Nanex article we see:

"Today (June 28, 2011) between 10:35 and 11:17, algorithms running on multiple option exchanges (6 or more), drove excessively high quote rates for SPY options (and 2 or three other symbols that I haven't identified yet). Fortunately this was a quiet trading period. A total of about 400,000,000 excessive quotes were generated -- that is, compared and scaled to the previous day. In one 100ms period, 2,000 SPY option contracts had about 16,000 fluttering quotes (some combination of nominal changes in bid, bid size, ask, ask size) resulting in saturating/delaying all SPY options on that line. These events occurred several times per minute during the interval. If these algorithms include more symbols, or if they run again during an active market, we will see severe problems. It is shocking to see this so widely distributed across so many exchanges and contracts simultaneously."

I'm pretty sure this is not intended to be damaging to the market. The fact that it runs for short bursts on limited channels suggests that there is a particular target. A target like Thor.

Saturating the quotes on individual lines will change the time lags (latency factor) during the intervals the quotes are generated. For Thor to work properly, it has to estimate by observation the precise lag between sending an order and having it arrive on each market. Randomly changing the lags for the different lines would confound RBC's (and others') attempts at ensuring all its orders arrive on all markets at the same time.
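To see why this works against a delay-equalizing router, here is a rough sketch. It assumes the router schedules its orders from previously observed latencies while the actual latency on one line has been inflated by a burst of quotes. The venue names and all numbers are invented for illustration.

```python
def arrival_spread(estimated_us, actual_us):
    """Gap (microseconds) between the first and last order to arrive.

    Orders are held according to the *estimated* latencies, but travel
    at the *actual* latencies.
    """
    slowest_estimate = max(estimated_us.values())
    arrivals = [
        (slowest_estimate - estimated_us[venue]) + actual_us[venue]
        for venue in estimated_us
    ]
    return max(arrivals) - min(arrivals)


# Hypothetical latency estimates built up by observation.
estimated = {"venue_a": 350, "venue_b": 900, "venue_c": 620}

# Quiet market: estimates match reality, so the orders land together.
calm_spread = arrival_spread(estimated, estimated)

# One line saturated by quote stuffing: 4000 microseconds of extra lag.
stuffed = dict(estimated, venue_a=estimated["venue_a"] + 4000)
stuffed_spread = arrival_spread(estimated, stuffed)

print(calm_spread, stuffed_spread)
```

The whole of the injected lag shows up as a gap between arrivals, which is exactly the window the latency arbitrageur needs.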

The quote stuffing in this case is intended to be noise, and its intent is to give the latency arbitrageurs the upper hand, as it is easier to generate an immense amount of random noise than it is to formulate an anticipatory response to it in real (microsecond) time.

The only approach I can see (if this is possible) is some kind of all-or-nothing fill on your orders. So your orders arrive on the different markets at slightly different times, but they aren't triggered until they are all ready and then they trigger at the same time. Of course the arbitrageur is probably also the "market maker" and can see you trying to match up your orders prior to execution, leaving you up shit creek in any case.

I really can't see an argument for these actions providing liquidity.

Thursday, July 7, 2011

Information theoretic approaches to characterizing complex systems, part 3: optimizing the window for constructing probability density of state space trajectories

In earlier essays I discussed creating plots of the probability density of a 2-d reconstructed phase space portrait as a means for investigating the dynamics of complex systems. For examples I used proxy records representing global ice volume, Himalayan paleomonsoon strength, and oceanographic conditions.

Today's discussion centres around using entropy (in an information theoretic sense) as a tool for deciding whether the window selected for calculating the probability density is sufficient.

We've seen this before. The probability density plot of the trajectory of the 2-d phase space portrait for one 270-ky window of the paleomonsoon strength proxy data suggests that the phase space is characterized by five areas of high probability--five areas where the trajectory of the phase space tends to be confined in small areas for episodes of time before rapidly moving towards another such area. In earlier articles we have argued that these represent areas of Lyapunov stability (LSA).
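For readers who want to try this, here is a minimal sketch of the two steps: a 2-d reconstruction by the time derivative method (value against rate of change), followed by a probability density estimated by counting trajectory points in grid cells. The grid size, the central-difference derivative, and the synthetic series are all assumptions made for illustration; they are not the settings or data behind the plots.

```python
import math
from collections import Counter


def phase_portrait(series, dt=1.0):
    """Pairs of (value, rate of change), using central differences."""
    return [
        (series[i], (series[i + 1] - series[i - 1]) / (2.0 * dt))
        for i in range(1, len(series) - 1)
    ]


def probability_density(points, bins=10):
    """Fraction of trajectory points in each cell of a bins x bins grid."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    x_lo, x_hi = min(xs), max(xs)
    y_lo, y_hi = min(ys), max(ys)

    def index(value, lo, hi):
        if hi == lo:
            return 0
        # Clamp so the maximum value falls in the last cell.
        return min(int((value - lo) / (hi - lo) * bins), bins - 1)

    counts = Counter((index(x, x_lo, x_hi), index(y, y_lo, y_hi)) for x, y in points)
    total = len(points)
    return {cell: n / total for cell, n in counts.items()}


# Synthetic stand-in for a proxy record (not real data).
series = [math.sin(0.1 * t) + 0.5 * math.sin(0.37 * t) for t in range(300)]
portrait = phase_portrait(series)
density = probability_density(portrait)
peak_cell = max(density, key=density.get)
print(peak_cell, density[peak_cell])
```

Cells with high values are where the trajectory lingers. When comparing windows, the grid limits should be fixed over the whole record rather than recomputed for each window, as they are here.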

I used a 270-ky window for all the plots. Why this number? Why any number?

Selection of the width of the window is important, and at the time I was creating these plots, I did not have a technique in place for prescribing this width. If the window is wide, resolving power is lower. If the window is narrow, resolving power is higher--consequently one would think to choose a narrow (i.e. short length of time) window. But if the window is too short, then the observed trajectory over the window is not representative of the phase space of the function.

The trajectories from two neighbouring 30-ky windows in phase space.

If we constructed probability-density plots from the two trajectories in the diagram above, the plots would not be very interesting. It would be even worse if the windows were shorter--say 1 ky, for instance. Then each successive probability density plot would be a blob translated some short distance in phase space, tracing out the trajectory of the entire function, alternating between being stretched a little and "snapping back".

Using entropy to prescribe window length

Entropy will be directly related to the size of the region of phase space that encompasses the trajectory within the window of time under investigation: the more of phase space the trajectory visits, the higher the entropy. If the trajectory travels from one LSA to another, then the phase space is characterized by alternating episodes of confinement to a small area punctuated by sweeping trajectories as the system moves to a new state of metastability. A graph of the entropy of successive windows of probability data would appear very noisy, as the entropy varies from very low values for windows dominated by confinement to one LSA, to much higher values during the shifts from one LSA to another.

As the window is lengthened, the noise level of the graph of successive values of entropy declines.

The diagram above is a series of graphs of successive values of entropy calculated over overlapping windows of length 30 ky (at top), 60 ky, 90 ky, 120 ky, and 150 ky (at bottom) using the calculated probability density of the 2-dimensional phase space reconstructed from the time series of the paleomonsoon strength proxy, during the early Quaternary. As the window lengthens, we see the plot become smoother until it reflects primarily secular changes rather than accidents of local trajectories.

The plot here seems to suggest that I can get by using a window of only 150 ky, rather than the 270 ky that I actually used.

The story for the trajectories in the Late Quaternary is the same--the minimum window width looks to be about 150 ky.
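The comparison of window lengths can be sketched as follows. This is a rough illustration, not the code behind the plots. It assumes Shannon entropy in bits over a fixed grid, windows measured in samples rather than ky, the population standard deviation as a stand-in for "noise level", and a synthetic trajectory.

```python
import math
from collections import Counter
from statistics import pstdev


def to_cells(points, bins=10):
    """Assign each (x, y) point to a cell of a grid fixed over the whole record."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    x_lo, x_hi, y_lo, y_hi = min(xs), max(xs), min(ys), max(ys)

    def index(value, lo, hi):
        return 0 if hi == lo else min(int((value - lo) / (hi - lo) * bins), bins - 1)

    return [(index(x, x_lo, x_hi), index(y, y_lo, y_hi)) for x, y in points]


def shannon_entropy(cells):
    """Entropy (bits) of the cell-occupancy distribution within one window."""
    n = len(cells)
    return -sum((k / n) * math.log2(k / n) for k in Counter(cells).values())


def windowed_entropy(cells, window, step=1):
    """Entropy of each successive, overlapping window along the trajectory."""
    return [
        shannon_entropy(cells[i:i + window])
        for i in range(0, len(cells) - window + 1, step)
    ]


def entropy_noise(cells, window, step=1):
    """Scatter of the successive entropy values for a given window length."""
    return pstdev(windowed_entropy(cells, window, step))


# Synthetic trajectory (value, rate of change); not real proxy data.
points = [
    (math.sin(0.05 * t) + 0.3 * math.sin(0.31 * t),
     0.05 * math.cos(0.05 * t) + 0.093 * math.cos(0.31 * t))
    for t in range(600)
]
cells = to_cells(points)

for window in (30, 60, 90, 120, 150):
    print(window, round(entropy_noise(cells, window), 3))
```

One would look for the shortest window beyond which the scatter stops falling appreciably; where that happens depends entirely on the record being examined.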

You'll have to excuse me. I have a lot of redrafting to do.

A more formal treatment of this method is given here.

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

Note: "ky" means thousand years, and refers to duration

"ka" means thousand years ago and refers to a specific time

Tuesday, July 5, 2011

The evolutionary arms race in the realm of HFT algos

The history of life is littered with abundant evidence for evolutionary arms races, by which one (or one group) of organism(s) develops some advantage over competitors, predators, or prey, which is soon after countered by the disadvantaged group. The dance has continued from the earliest times of life until the present, and is presumed to be ongoing. Indeed, it is one of the central selective pressures effecting evolution--by eliminating the losers of the arms race.

As I am not an evolutionary biologist, I was thinking in particular of asymmetric races, in which competing organisms adopt different methods, rather than symmetric races.

My interest in such things stems from having a son (and many, many relatives) with G6PD deficiency, a relatively common enzyme deficiency--selected for in humans most likely because it confers some resistance to malaria. The chief drawback of this genetic condition is that eating certain foods (and medications) can cause massive destruction of red blood cells.

How does such a condition appear? Like most genetic conditions it most likely is an example of a random mutation which hangs around because it is selected for in malarial environments.

Plants have developed toxins over evolutionary history, and one such class of toxins causes destruction of red blood cells. Mammals (among other animals) have developed enzymes that break down these toxins, and the breakdown products are now beneficial. In fact we call these toxins "antioxidants".

Ironically, the enzyme G6PD apparently plays a role in the life-cycle of the malaria parasite, as those who have this condition and who are infected by malaria typically carry lower parasite loads.

In the digital realm, the concept of evolutionary arms races has been around since about 1980, and such races are most commonly observed in the ongoing battle between computer viruses and antivirus products.

They also appear in the realm of high-frequency trading (HFT), although as no one is eager to produce manuals on how their specific systems work it is a little harder to see how.

Years ago we used to see stops getting busted on in-demand shares--which we soon recognized as a sign that the particular stocks targeted would soon see gains. It always happened during a quiet trading time, usually after about 11 in the morning, and suddenly all the bids would be hit until a massive stop-loss was triggered and picked up. I remember in 2002 seeing CDE-N knocked down 30% in a matter of minutes, followed by a massive pick-up of some sucker's stop-loss, followed by furious action as the price bounced back. That passed for HFT in those days.

One of the modern approaches to HFT is latency arbitrage, whereby some entities are able to see more up-to-date buy and sell orders than the general public sees, and use this info to either scoop up the market with an arbitraged advantage or withdraw orders only to replace them moments later at a higher price. For instance, you may be trying to buy shares in company ABC, but because there are differing time delays for each of the markets on which you are seeking shares, as soon as your first order appears on an exchange, all other available offers at your buy price are cancelled and resubmitted at a higher price.

Recently, RBC announced a new program called "Thor", meant to combat latency arbitrage. The idea was that RBC would monitor the latency for all markets and use that info to ensure their orders arrive on all markets simultaneously.

Well, what's an HF arbitrageur to do? Why not try quote stuffing? A large number of quotes on a single stock can slow down the reporting on an entire channel, so why not use it to vary the latencies by random factors, making it more difficult for a program like Thor to work? If the latency factors for each market start varying randomly, choosing the appropriate lags for Thor becomes impossible.

Saturday, July 2, 2011

Big trouble at the Fed

This is a classic 80s film destined to be remade into an economic thriller.

Our hero, Jack Burton, an eternally worldly innocent, is thrust into a dark world of supernatural economics.

"This here's the Pork Chop Express, and I tell ya--a guy would have to be crazy to think that the global reserve currency could be completely unbacked"

In a New York back alley, mysterious lights and storms appear out of a clear blue sky. Dollar No Pan, an old, old currency, stricken by the curse of no backing, seeks his redemption by marrying a green-backed girl.

"The guys in the red turbans? Bond vigilantes. Somehow No Pan controls them.

Don't make a sound, Jack! These guys are animals!"

"Yes, Mr. Burton--when Nixon closed the gold window I was struck by the curse of no backing!

But once I marry a green-backed girl, I shall receive backing from the IMF via SDRs and rule the world from beyond the grave!"

For inexplicable reasons, the old geezer keeps turning into a floating, fleshless monstrosity with unearthly powers. He can't touch or be touched in this state (as he is backed by nothing), but dreams of continuing his role as the world reserve currency forever.

"Once I marry her, I will rule the world!"

There are those who would resist the plans of Dollar No Pan.

"Don't worry, Jack. We have been accumulating gold for years."

Jack Burton and friends infiltrate the No Pan estate attempting to rescue the green-backed girl and discover the truth behind the unbacked currency.

"Hey, I used to know this guy. He said he would bust those Goldman Sachs algos!"

"What's this?" - "Black blood of the FED."

"You mean oil?" - "No! Black blood of the FED!"

"We want a gold-backed currency and we're willing to fight for it!"

"By the power of Alan Greenspan, I will defeat you!"

"No! I don't want to marry you! Try Ellen Brown!"
